Building a synthetic data feed to AWS S3

Building a data feed from a query is a common use case of crul. In this example, we'll deploy a crul query that builds a synthetic data set using a REST API to seed values, as well as fully synthesized values for personally identifying or sensitive values. We'll configure this data feed to write to AWS S3 on a scheduled interval.

Use case

We want a query that will generate a semi-synthetic data set of personal health related data. For obvious reasons, we want this data set to be anonymized. We need a name, person_id, phone, heart_rate, temperature, and facility. Our facility value should be randomly selected from a list of hospitals in the New York area, which we can retrieve using a public dataset/API.

The query

Here is the entire query, we'll walk through it step by step next.

api get https://health.data.ny.gov/download/7a62-tptu/application%2Fx-zip-compressed --serializer "zip"

|| filter "(name == 'All_Hospital_Citations_Q1_2023.csv')"

|| api get https://health.data.ny.gov/download/7a62-tptu/application%2Fx-zip-compressed --serializer "zip" --unzip.path $path$

|| table Name

|| unique Name

|| rename Name facility

|| synthesize facility

--prompt "add a column called name which is a first name and a last name,

a column called person_id containing a guid,

a column called phone containing a phone number of the format (111)-111-1111,

a column called heart rate containing a value between 45 and 100,

a column called age containing an age between 18 and 100 (if age is greater than 60, subtract 10 from the heart rate column value),

a column called temperature containing a temperature between 97 and 99, rounded to the second decimal place"

--count 1000

Building our query

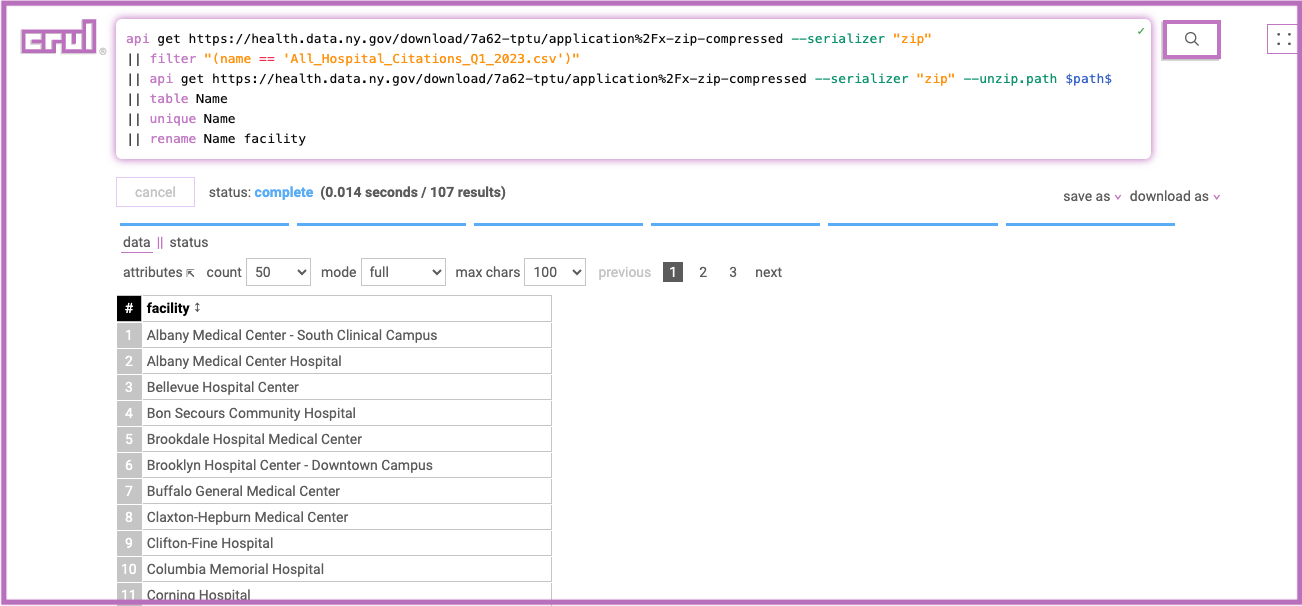

Getting a list of hospitals in New York

Our first step will be to contruct a set of hospitals in the New York area that we can select from for the "real" part of our synthesized data set.

api get https://health.data.ny.gov/download/7a62-tptu/application%2Fx-zip-compressed --serializer "zip"

|| filter "(name == 'All_Hospital_Citations_Q1_2023.csv')"

|| api get https://health.data.ny.gov/download/7a62-tptu/application%2Fx-zip-compressed --serializer "zip" --unzip.path $path$

|| table Name

|| unique Name

|| rename Name facility

We'll use a publically available data set that is stored in a zip file. With the api command's --serializer flag set to zip, we'll extract the metadata from the archive to get a list of available files. We then use the filter command to select a specific file, then use the api command again with the --serializer flag set to zip and the --unzip.path flag set to the token $path$. The token $path$ will be replaced by the value in the path column(s) from the previous filter stage. See the Fetch a Zip Archive and Scan Extract docs for full details.

The last few stages will use the table command to only keep the Name column, the unique command to remove any duplicates, and the rename command to rename the Name column to facility.

Great! Now we have a list of all the New York hospitals included in this data set, we can use this in our next synthesizing stage to include some real values.

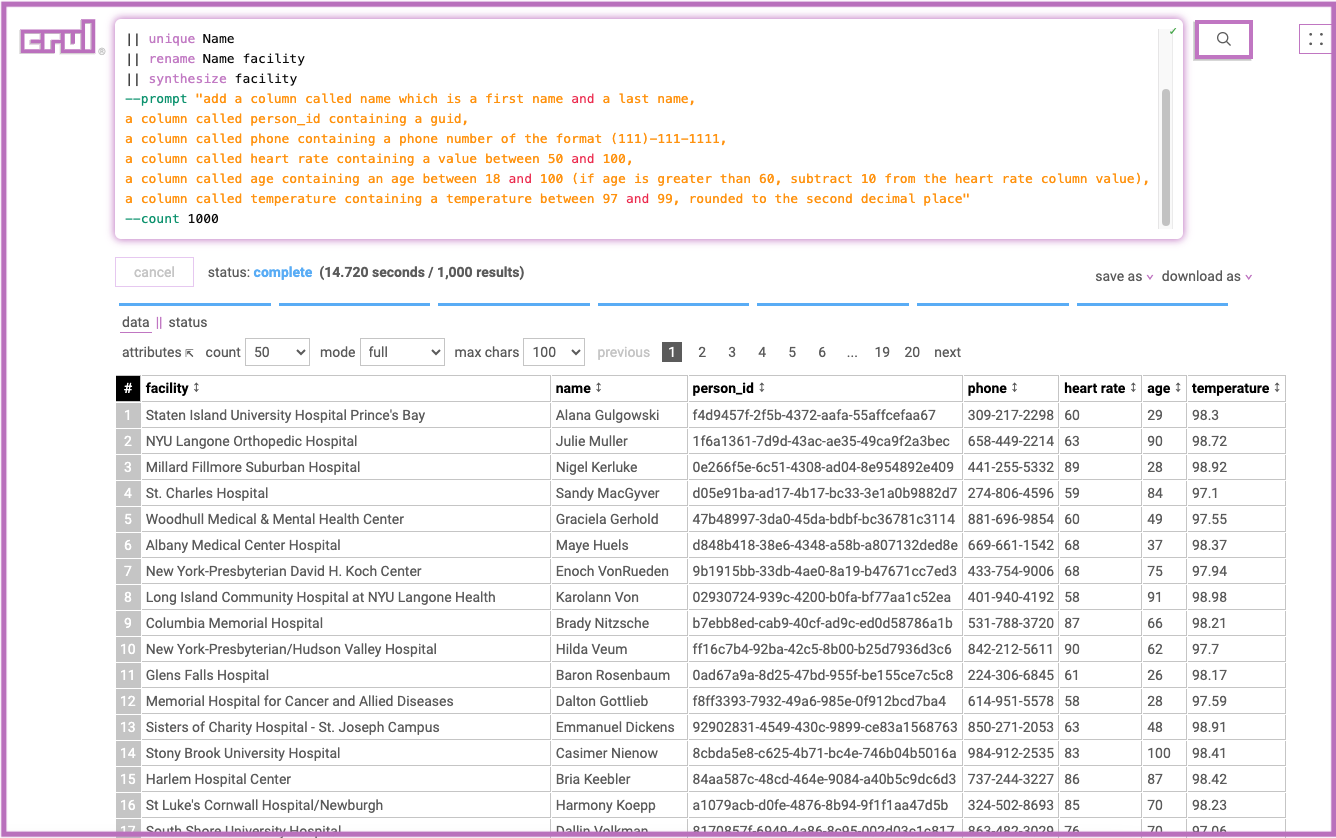

Synthesizing a data set using real seed values and a prompt

The synthesize command can generate fully synthesize values using the --prompt flag, and/or randomly select values from the previous stage through arguments. See the Synthetic Data Generation docs for full details.

In this example we'll use both approaches.

...

|| synthesize facility

--prompt "add a column called name which is a first name and a last name,

a column called person_id containing a guid,

a column called phone containing a phone number of the format (111)-111-1111,

a column called heart_rate containing a value between 45 and 100,

a column called age containing an age between 18 and 100 (if age is greater than 60, subtract 10 from the heart_rate column value),

a column called temperature containing a temperature between 97 and 99, rounded to the second decimal place"

--count 1000

We'll provide the facility column as an argument to our synthesize command. This will randomly select values from the facility column from the previous stage of results.

We'll also provide a natural language --prompt describing the data set we would like to synthesize, with some details about format, column names, and special rules. For example: if age is greater than 60, subtract 10 from the heart_rate column value.

Finally we set the --count flag to 1000, which is the number of rows we would like to synthesize.

Note: prompts can take some iteration to get right. If you have a prompt that generally works but occasionally fails, you can increase the --retry value to configure the number of times the synthesizing operation will be attempted.

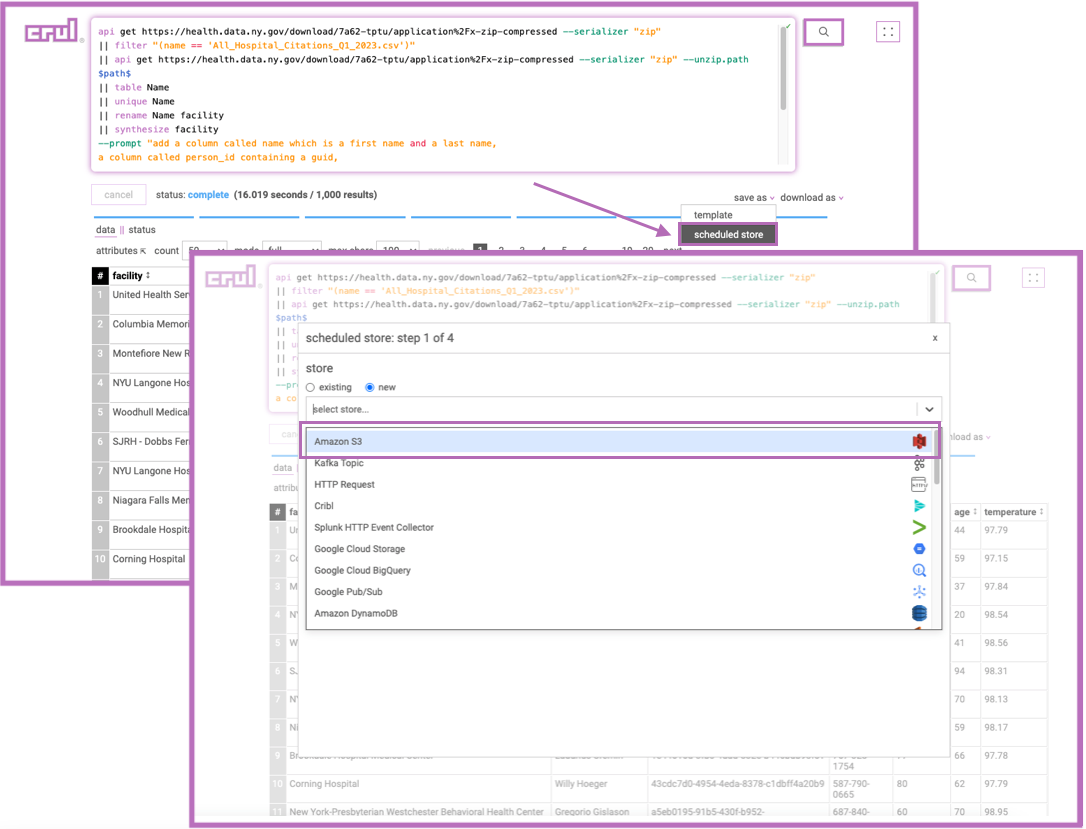

Configuring a Store, Scheduling and Sending Incremental Diffs to S3

We now want to export this data set to AWS S3 and schedule it to run on a 1 hour interval.

Since this diff/export/schedule step is so common, we can use crul's scheduled export wizard. Let's walk through each of the steps.

Step 1: Select a store or create a new one

The first step of the scheduled export wizard is to select a store, or configure a new one. Let's assume we are going to create a new one. If you have an existing store (possibly created by the export wizard previously?) you can simply select it from the dropdown.

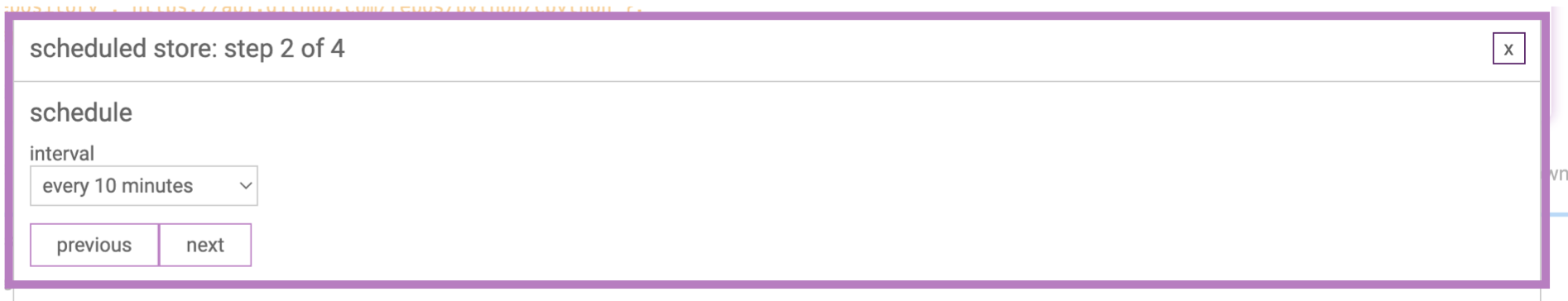

Step 2: Select a schedule interval

Select a time interval for this query to run on. It will run shortly after creation and then on the set interval.

CAUTION! If you pick a short interval (less than 5 mins) you may run into issues with the crul cache. Ensure that stages in your query that you do not wish to be cached set the --cache flag to false. Example: api ... --cache false. When in doubt, set --cache false on all stages.

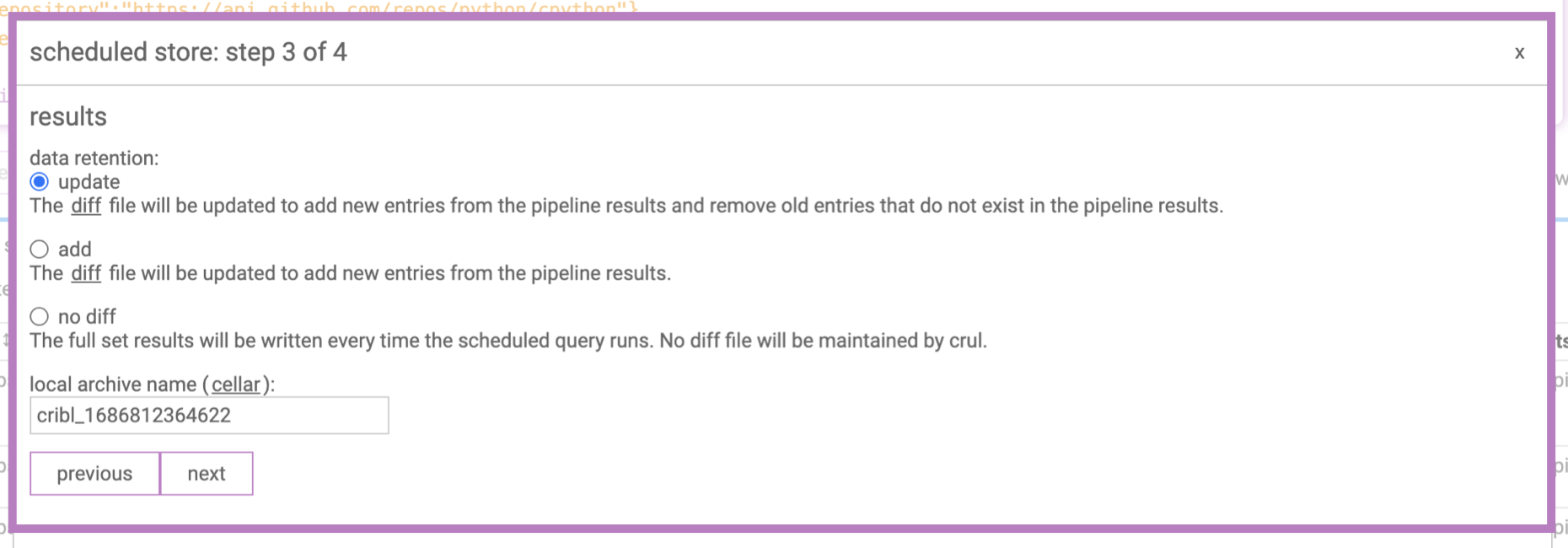

Step 3: Select a diff strategy

There are a few diff strategies. We can select no diff for this data feed as we want to send a fresh data set each time.

Rotating diff

The rotating diff is the most commonly used diff strategy. It works by comparing the current diff file to the current results, then returning the results that do not exist in the diff file. Finally, it overwrites the diff file with all the current results, including ones that already existing in the diff file.

Content of old diff:

{"col": "value1"}

{"col": "value2"}

Results prior to diff command:

{"col": "value1"}

{"col": "value2"}

{"col": "value3"}

Results after diff command:

{"col": "value3"}

Contents of diff file after diff command:

{"col": "value1"}

{"col": "value2"}

{"col": "value3"}

Appending diff

Store ALL results and append new ones. This can lead to big, growing, diff files and is NOT recommended unless the results sets are small and/or your are cleaning the diff file regularly of older entries.

No diff

Send the whole set of results each time.

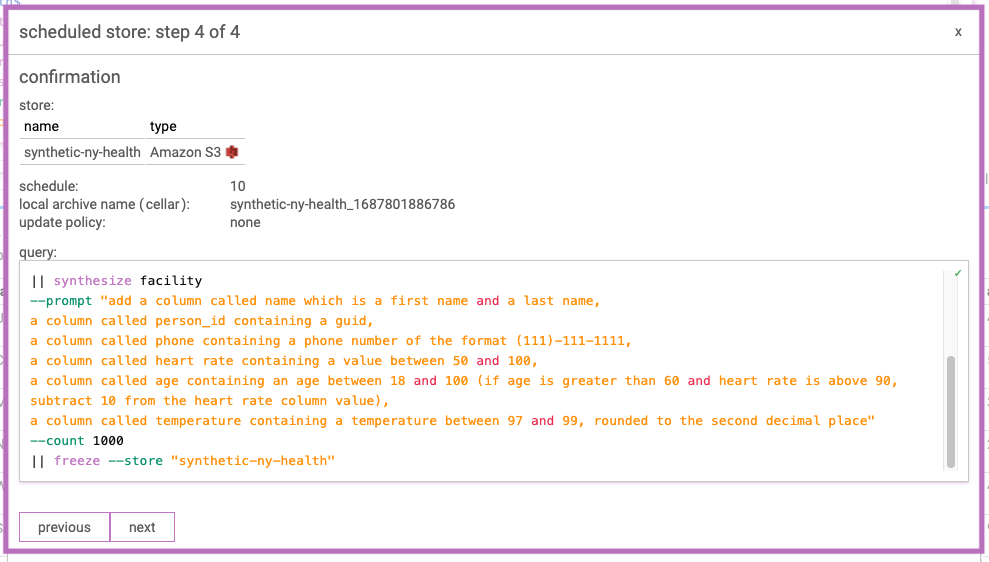

Step 4: Confirm and deploy!

Check that the details look correct and hit Submit to deploy the data feed. It will start running on a schedule.

Note that crul must be continually running for the scheduled queries to run. We recommend using the docker image as a long running service when creating data feeds.

Summary

We've now seen how simple it is to convert a query that synthesizes data sets into a data feed, to then populates one of 30+ destinations on a schedule, while optionally maintaining a diff to ensure that only new result are sent over.

Any crul query can be turned into a feed using these steps! Have a web page that you would like turned into a data feed? No problem! Need to turn a REST API into a data feed? We got you!

Advanced

Looks like that wizard added a stage to my query - how does it work?

With the exception of the scheduling step, the wizard really only adds up to 2 stages to our data retrieval query. In this case, the diff stage is skipped as we selected no diff.

The added stage is the freeze command, which delivers our data to a configured store. See exporting results.

Once you understand these commands, you can construct powerful data feeds yourself and schedule them manually, or just use the wizard!

Join our community

Come hang out and ask us any questions. Many of the features and fixes in crul come from our user requests!